I've found that one of our PI points was misconfigured (no compression or exception settings), and as a result the archives hold a value every second, sometimes two values per second. When people try to retrieve data for large time periods, the query fails because the number of records is too great (we currently have the limit set to 5 million, which at a 1-second scan rate equates to about 58 days of data).
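As a rough check of how far the record limit stretches, here is a minimal sketch in Python. It assumes a steady event rate, which the real point does not have, and the variable names are only illustrative.

```python
# Record limit quoted above and the number of seconds in one day.
RECORD_LIMIT = 5_000_000
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

# Assumption: exactly one archived event per second.
days_at_one_per_second = RECORD_LIMIT / SECONDS_PER_DAY

# Assumption: two archived events every second (the worst case described).
days_at_two_per_second = RECORD_LIMIT / (2 * SECONDS_PER_DAY)

print(f"1 event/s:  {days_at_one_per_second:.1f} days")
print(f"2 events/s: {days_at_two_per_second:.1f} days")
```

So the retrievable window is somewhere between roughly one and two months, depending on how often the second value per second occurs.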

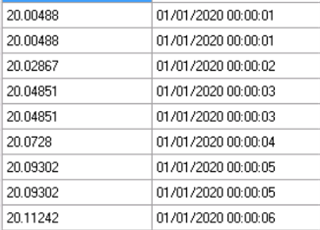

Is there a way to retrospectively apply compression settings to clean the unwanted events out of the archive? And can the archive be re-processed to remove the second value wherever there are two values in a 1-second period? These show up as duplicate values for the same timestamp (see the screenshot above).
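To make the second question concrete, here is a minimal sketch in Python of the clean-up being described, run on exported (timestamp, value) pairs rather than on the archive itself. It does not use any PI API; the function name `drop_extra_events` and the sample data are hypothetical, and it assumes the events are already sorted by time.

```python
from datetime import datetime


def drop_extra_events(events):
    """Keep only the first event in each one-second period.

    `events` is an iterable of (timestamp, value) pairs sorted by timestamp.
    """
    kept = []
    last_second = None
    for timestamp, value in events:
        # Truncate to whole seconds so sub-second events share one key.
        second = timestamp.replace(microsecond=0)
        if second != last_second:
            kept.append((timestamp, value))
            last_second = second
    return kept


# Hypothetical sample: two events land in the 12:00:01 second.
sample = [
    (datetime(2020, 1, 1, 12, 0, 0), 10.0),
    (datetime(2020, 1, 1, 12, 0, 1), 10.5),
    (datetime(2020, 1, 1, 12, 0, 1), 10.6),
    (datetime(2020, 1, 1, 12, 0, 2), 11.0),
]

cleaned = drop_extra_events(sample)
for timestamp, value in cleaned:
    print(timestamp.isoformat(), value)
```

This keeps the first value in each second and discards the rest; what I am after is the equivalent operation applied to the events already stored in the archive.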

Thanks

Col